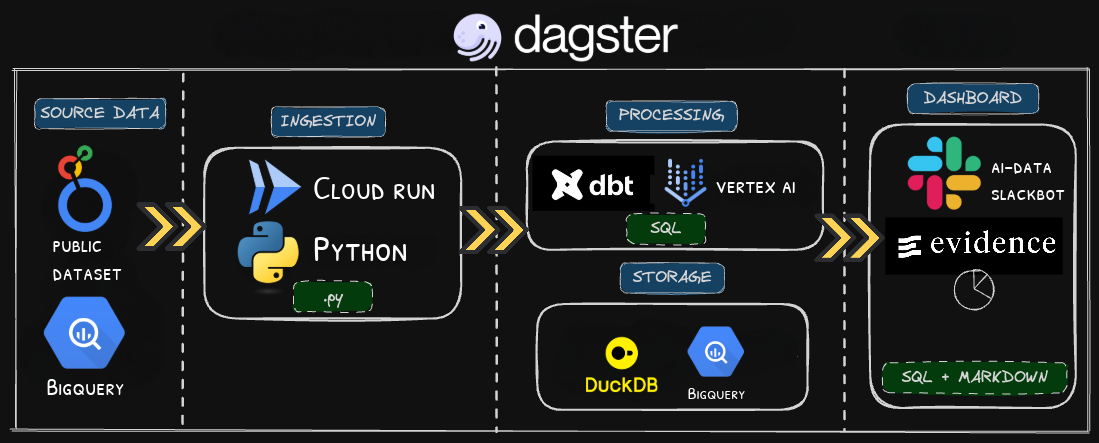

I built a full machine learning pipeline for a product dataset as an exercise in modern orchestration and cloud tooling, including Dagster, Cloud Run, and Vertex AI.

Components

I used Dagster as the DAG framework. The other components in the stack include:

- BigQuery for source data - I use a public dataset on BigQuery, together with dbt.

- DuckDB and Evidence.dev - I use DuckDB as the data backend for the Evidence.dev dashboard to keep query times low.

- Gemini integration - I call the Vertex AI API to generate AI highlights of trends in the data.
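To make the DAG idea concrete, here is a minimal sketch of how the components above could depend on one another. It deliberately uses Python's standard-library `graphlib` rather than Dagster's own API, and the step names and the edges between them are my assumptions for illustration, not the project's actual asset definitions.

```python
from graphlib import TopologicalSorter

# Hypothetical step names. Each key maps to the set of steps it depends on.
# The exact edges are assumed; the real project may wire these differently.
PIPELINE = {
    "bigquery_source": set(),
    "dbt_models": {"bigquery_source"},
    "duckdb_export": {"dbt_models"},
    "gemini_highlights": {"duckdb_export"},
    "evidence_dashboard": {"duckdb_export", "gemini_highlights"},
}


def run_order(graph):
    """Return an execution order in which every step follows its dependencies."""
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    for step in run_order(PIPELINE):
        print(step)
```

A framework like Dagster resolves this kind of ordering for you from the declared dependencies; the sketch only shows the underlying idea of running each step after the steps it relies on.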

Notes

Each module can be run manually for testing. Right now, the project runs on GitHub Actions, scheduled for the first day of each month.
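One way to make individual modules runnable by hand is a small command-line entry point that dispatches to a single step by name. The sketch below is an assumption about how that could look: the step functions (`load_source`, `build_dashboard`) and the `STEPS` registry are hypothetical placeholders, not the project's real modules.

```python
import argparse


def load_source():
    # Placeholder for a real step; returns a status message for illustration.
    return "loaded source data"


def build_dashboard():
    # Placeholder for a real step; returns a status message for illustration.
    return "built dashboard"


# Hypothetical registry mapping step names to the functions that run them.
STEPS = {"load_source": load_source, "build_dashboard": build_dashboard}


def main(argv=None):
    """Parse a step name from the command line and run only that step."""
    parser = argparse.ArgumentParser(description="Run one pipeline step by name.")
    parser.add_argument("step", choices=sorted(STEPS))
    args = parser.parse_args(argv)
    result = STEPS[args.step]()
    print(result)
    return result


if __name__ == "__main__":
    main()
```

Saved as, say, `run_step.py` (a hypothetical filename), this would be invoked as `python run_step.py load_source`, which keeps manual testing separate from the scheduled monthly run.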